https://blog.apify.com/4-ways-to-authenticate-a-proxy-in-puppeteer-with-headless-chrome-in-2022/

Puppeteer with a headless Chromium browser has proven to be an extremely simple, yet powerful tool for developers to automate various actions on the web, such as filling in forms, scraping data, and saving screenshots of web pages.

When paired with a proxy, Puppeteer becomes even more capable; however, it can be tricky to configure Puppeteer with headless Chrome correctly so that it authenticates with a proxy that requires a username and password.

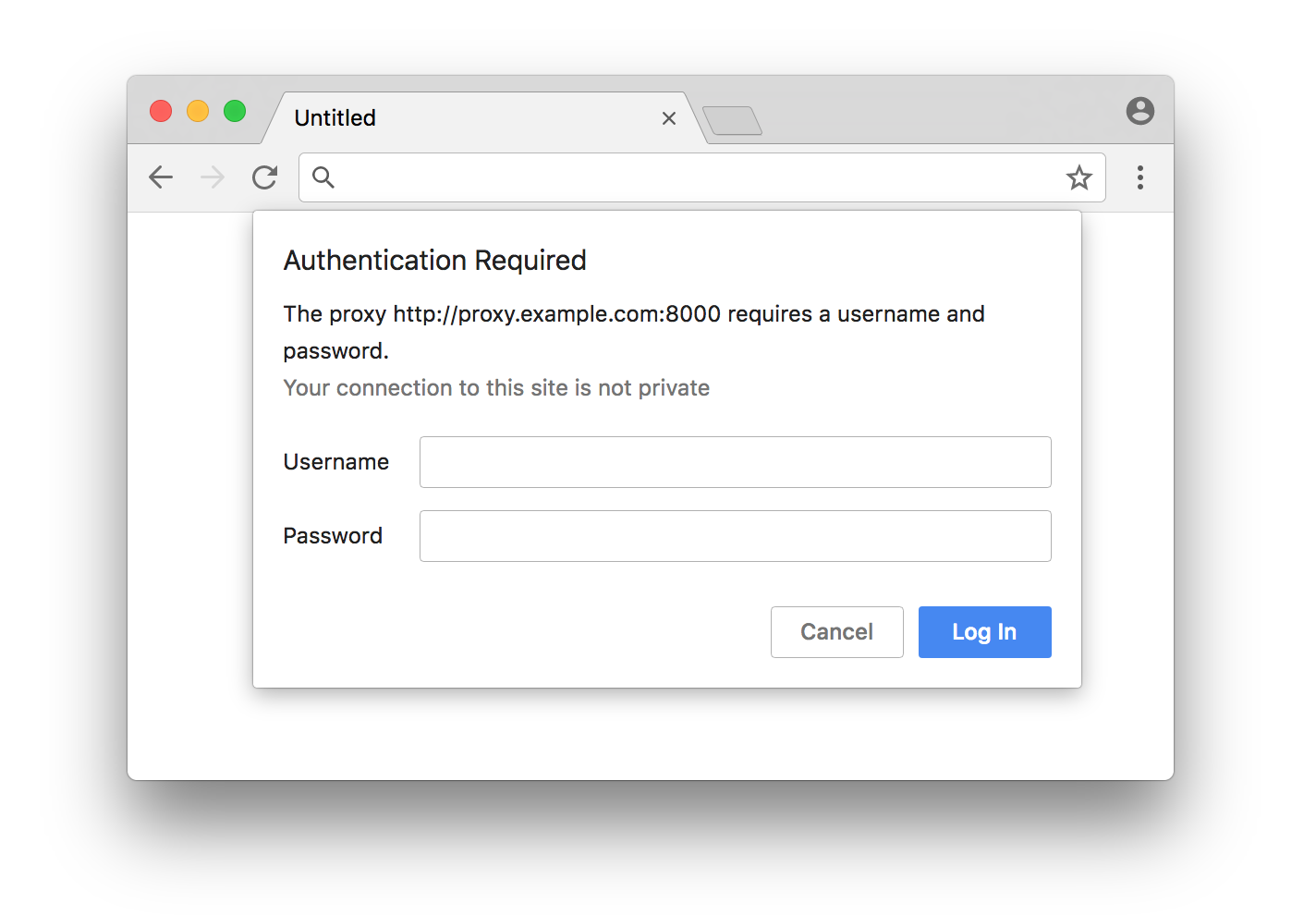

Normally, when using a proxy requiring authentication in a non-headless browser (specifically Chrome), you’ll be required to add credentials into a popup dialog that looks like this:

The problem with running in headless mode is that this dialog never appears, as a headless browser has no UI. This means you have to authenticate your proxy some other way. Perhaps you’ve tried embedding the username and password directly in the proxy URL passed to the --proxy-server flag (which doesn’t work).
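A minimal sketch of what such an attempt might look like, with the credentials embedded in the --proxy-server value. The environment variable names (PROXY_HOST, PROXY_USERNAME, PROXY_PASSWORD), the buildNaiveLaunchOptions helper, and the target URL are assumptions made for illustration, not part of any library:

```javascript
// Hypothetical environment variables holding the proxy details,
// e.g. PROXY_HOST="proxy.example.com:8000".
const { PROXY_HOST, PROXY_USERNAME, PROXY_PASSWORD } = process.env;

// Builds launch options with the credentials embedded in the proxy URL.
// This is the approach that does NOT authenticate the proxy.
function buildNaiveLaunchOptions(username, password, proxyHost) {
    return {
        args: [`--proxy-server=http://${username}:${password}@${proxyHost}`],
    };
}

async function main() {
    const puppeteer = require('puppeteer'); // npm install puppeteer
    const browser = await puppeteer.launch(
        buildNaiveLaunchOptions(PROXY_USERNAME, PROXY_PASSWORD, PROXY_HOST),
    );
    try {
        const page = await browser.newPage();
        // Expected to fail, since the proxy never receives valid credentials.
        await page.goto('https://example.com');
    } catch (err) {
        console.error('Navigation failed:', err.message);
    } finally {
        await browser.close();
    }
}

// Runs only when a proxy has been configured.
if (PROXY_HOST) {
    main();
}
```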

Don’t worry, we’ve tried it too. This doesn’t work because Chromium doesn’t offer a command-line option that supports passing in proxy credentials. Today, we’ll show you four different (and very simple) methods that’ll help you authenticate your proxy and be on your way.


1. Using the authenticate() method on the Puppeteer page object:

For two years now, Puppeteer has supported a baked-in solution to authenticating a proxy with the authenticate() method. Nowadays, this is the most common method of doing it in vanilla Puppeteer.

There are two key things to note with this method:

- The proxy URL must be passed into the --proxy-server flag within the args array when launching Puppeteer.
- The authenticate() method takes an object with both “username” and “password” keys.
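The two points above can be sketched as follows. The environment variable names, the buildLaunchOptions helper, and the target URL are assumptions for illustration; only puppeteer.launch(), the --proxy-server flag, and page.authenticate() come from Puppeteer and Chromium:

```javascript
// Hypothetical environment variables holding the proxy details,
// e.g. PROXY_HOST="proxy.example.com:8000".
const { PROXY_HOST, PROXY_USERNAME, PROXY_PASSWORD } = process.env;

// Only the proxy address goes into --proxy-server, without credentials.
function buildLaunchOptions(proxyHost) {
    return { args: [`--proxy-server=http://${proxyHost}`] };
}

async function main() {
    const puppeteer = require('puppeteer'); // npm install puppeteer
    const browser = await puppeteer.launch(buildLaunchOptions(PROXY_HOST));
    try {
        const page = await browser.newPage();
        // Supply the credentials on the page before the first navigation.
        await page.authenticate({
            username: PROXY_USERNAME,
            password: PROXY_PASSWORD,
        });
        await page.goto('https://example.com');
        console.log(await page.title());
    } finally {
        await browser.close();
    }
}

// Runs only when a proxy has been configured.
if (PROXY_HOST) {
    main().catch((err) => {
        console.error(err);
        process.exitCode = 1;
    });
}
```

Because authenticate() is called on the page object, it likely needs to be repeated for every new page you open.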

2. Using the proxy-chain NPM package:

The proxy-chain package is an open-source package developed and maintained by Apify. It takes a different approach, letting you easily “anonymize” an authenticated proxy. To do this, pass your proxy URL with authentication details into the proxyChain.anonymizeProxy() method, then use its return value in the --proxy-server argument when launching Puppeteer.

An important thing to note when using this method is that after closing the browser, it is a good idea to use the closeAnonymizedProxy() method to forcibly close any pending connections that there may be.
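A sketch of this flow, assuming the proxy-chain and puppeteer packages are installed. The environment variable names, the buildAuthenticatedProxyUrl helper, and the target URL are hypothetical; anonymizeProxy() and closeAnonymizedProxy() are the proxy-chain functions named above:

```javascript
// Hypothetical environment variables holding the proxy details,
// e.g. PROXY_HOST="proxy.example.com:8000".
const { PROXY_HOST, PROXY_USERNAME, PROXY_PASSWORD } = process.env;

// Builds the upstream proxy URL including its authentication details.
function buildAuthenticatedProxyUrl(username, password, proxyHost) {
    return `http://${username}:${password}@${proxyHost}`;
}

async function main() {
    const puppeteer = require('puppeteer'); // npm install puppeteer proxy-chain
    const proxyChain = require('proxy-chain');

    const originalUrl = buildAuthenticatedProxyUrl(PROXY_USERNAME, PROXY_PASSWORD, PROXY_HOST);
    // Starts a local proxy that needs no credentials and forwards traffic
    // to the authenticated upstream proxy; resolves to the local proxy URL.
    const anonymizedUrl = await proxyChain.anonymizeProxy(originalUrl);

    const browser = await puppeteer.launch({
        args: [`--proxy-server=${anonymizedUrl}`],
    });
    try {
        const page = await browser.newPage();
        await page.goto('https://example.com');
        console.log(await page.title());
    } finally {
        await browser.close();
        // The second argument (true) forcibly closes pending connections.
        await proxyChain.closeAnonymizedProxy(anonymizedUrl, true);
    }
}

// Runs only when a proxy has been configured.
if (PROXY_HOST) {
    main().catch((err) => {
        console.error(err);
        process.exitCode = 1;
    });
}
```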

This package performs both basic HTTP proxy forwarding and HTTP CONNECT tunneling to support protocols such as HTTPS and FTP. It also supports many other features, so it is worth looking into for other use cases.

3. Within ProxyConfigurationOptions in the Apify SDK:

The Apify SDK is the most modern and efficient way to write scalable automation and scraping software in Node.js using Puppeteer, Playwright, and Cheerio. If you aren’t familiar with it, check out its documentation.

The ProxyConfigurationOptions object that you pass to the Apify.createProxyConfiguration() method has an option named proxyUrls. This is simply an array of custom proxy URLs which will be rotated. Though it is an array, you can still pass only one proxy URL.

Pass your proxy URL with authentication details into this array, then pass the proxyConfiguration into the options of PuppeteerCrawler, and your proxy will be used by the crawler.
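A sketch of how this might look, assuming version 2.x of the apify package, which matches the Apify.createProxyConfiguration() call named above. The PROXY_URLS environment variable, the parseProxyUrls helper, the request list name, and the target URL are assumptions for illustration:

```javascript
// Hypothetical environment variable: comma-separated proxy URLs with
// credentials, e.g.
// PROXY_URLS="http://bob:secret@proxy-1.example.com:8000,http://bob:secret@proxy-2.example.com:8000"
function parseProxyUrls(value = '') {
    return value
        .split(',')
        .map((url) => url.trim())
        .filter(Boolean); // drop empty entries
}

async function main(proxyUrls) {
    const Apify = require('apify'); // npm install apify@2 puppeteer

    // The custom proxy URLs are rotated automatically by the SDK.
    const proxyConfiguration = await Apify.createProxyConfiguration({ proxyUrls });

    const requestList = await Apify.openRequestList('start-urls', [
        'https://example.com',
    ]);

    const crawler = new Apify.PuppeteerCrawler({
        requestList,
        proxyConfiguration,
        handlePageFunction: async ({ request, page }) => {
            console.log(`${request.url}: ${await page.title()}`);
        },
    });

    await crawler.run();
}

// Runs only when at least one proxy URL has been configured.
const proxyUrls = parseProxyUrls(process.env.PROXY_URLS);
if (proxyUrls.length > 0) {
    main(proxyUrls).catch((err) => {
        console.error(err);
        process.exitCode = 1;
    });
}
```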

The massive advantage of using the Apify SDK for proxies, compared to the first method, is that you can supply multiple custom proxies and the rotation between them is handled automatically.

4. Setting the Proxy-Authorization header

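If all else fails, setting the Proxy-Authorization header for each of your crawler’s requests is an option; however, it does have its drawbacks. This method only works with HTTP websites, and not HTTPS websites. For HTTPS, the browser first opens a tunnel through the proxy with a CONNECT request, and the extra header is not sent with that request.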

Similarly to the first method, the proxy URL needs to be passed into the --proxy-server flag within args. The second step is to set an extra auth header on the page object using the setExtraHTTPHeaders() method.

It is important to note that your authorization details must be base64 encoded. This can be done with the Buffer class in Node.js.
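The steps above can be sketched as follows, assuming the proxy expects the standard Basic scheme. The environment variable names, the buildProxyAuthHeader helper, and the target URL are hypothetical:

```javascript
// Hypothetical environment variables holding the proxy details,
// e.g. PROXY_HOST="proxy.example.com:8000".
const { PROXY_HOST, PROXY_USERNAME, PROXY_PASSWORD } = process.env;

// Base64-encodes "username:password" with the Node.js Buffer class
// and wraps it in a Basic Proxy-Authorization header.
function buildProxyAuthHeader(username, password) {
    const token = Buffer.from(`${username}:${password}`).toString('base64');
    return { 'Proxy-Authorization': `Basic ${token}` };
}

async function main() {
    const puppeteer = require('puppeteer'); // npm install puppeteer
    const browser = await puppeteer.launch({
        args: [`--proxy-server=http://${PROXY_HOST}`],
    });
    try {
        const page = await browser.newPage();
        // The header is added to every request this page makes.
        await page.setExtraHTTPHeaders(
            buildProxyAuthHeader(PROXY_USERNAME, PROXY_PASSWORD),
        );
        // Plain HTTP target, since this method does not work for HTTPS.
        await page.goto('http://example.com');
        console.log(await page.title());
    } finally {
        await browser.close();
    }
}

// Runs only when a proxy has been configured.
if (PROXY_HOST) {
    main().catch((err) => {
        console.error(err);
        process.exitCode = 1;
    });
}
```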

Once again, this method only works for HTTP websites, not HTTPS websites.